As part of the GEP v2 evolutions, and in the context of a formal acceptance review with the European Space Agency (ESA), Terradue has successfully demonstrated new GEP capabilities for Cloud Computing infrastructure independence and auto-scaling, thanks to the release of the multi-tenant cluster component.

During the demonstration, data processing jobs from GEP InSAR and optical services were submitted and run on different, independent Cloud infrastructures such as EGI.eu and cloudferro.com (IPT), as well as on Terradue’s private Cloud. Resources from Amazon Web Services (AWS) are also being used for testing and benchmarking some services. In addition, the capability of the platform to dynamically “scale out” and “scale in” according to the processing demand from users was shown with the INGV STEMP service, running on the multi-tenant cluster and exploiting IPT computing resources.

Background: the multi-tenant cluster component

The multi-tenant cluster component delivers a Production Center as-a-Service, which provisions a cluster with the full Hadoop YARN stack. A unified and automatic deployment process is ensured by the Cluster Manager console, which administers Hadoop clusters at any scale. It installs the selected elements of the processing stack (Hadoop, YARN, Oozie, Docker…) in minutes, and ensures optimal settings, as well as configuration and security management, from a single console.

A cluster is dynamic when its capacity can be scaled horizontally. This means that nodes can be added or removed on the fly, based on the cluster load and the scheduled processing. The Capacity Manager works in conjunction with the Cloud Controller to add or remove nodes from the cluster, based on the contractual requirements for quality of service, which are translated into and monitored as Service Level Agreements (SLA).
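The scale-out and scale-in decision described above can be sketched as a simple threshold rule. This is only an illustration of the idea, not the Capacity Manager's actual logic: the `ScalingPolicy` class, the `target_node_count` function, and all threshold values are hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingPolicy:
    # All values below are illustrative assumptions, not GEP settings.
    scale_out_load: float = 0.80  # add a node when average load exceeds this
    scale_in_load: float = 0.30   # remove a node when average load drops below this
    min_nodes: int = 1            # never shrink the cluster below this size
    max_nodes: int = 10           # never grow the cluster beyond this size


def target_node_count(current_nodes: int, load: float, policy: ScalingPolicy) -> int:
    """Return the node count to move to, changing by at most one node per call."""
    if load > policy.scale_out_load and current_nodes < policy.max_nodes:
        return current_nodes + 1  # scale out
    if load < policy.scale_in_load and current_nodes > policy.min_nodes:
        return current_nodes - 1  # scale in
    return current_nodes          # load is within bounds, or a size limit was reached
```

Keeping a gap between the two thresholds is a common way to avoid adding and removing nodes repeatedly when the load hovers around a single value.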

Adopting Containers

The multi-tenant cluster allows Docker containers to be launched directly as execution containers. This lets service providers package their applications, together with all of their software dependencies, into a Docker container. The container provides a consistent environment for execution, as well as isolation from other applications or software installed on a given host, so processing jobs run in an isolated computing environment. The application release process from Development to Operation becomes transparent and straightforward. Docker containers also make multi-tenant clusters more cost-effective, because the runtime environment of each user application is isolated while sharing the same production cluster.

Cost optimized provisioning for on-demand processing

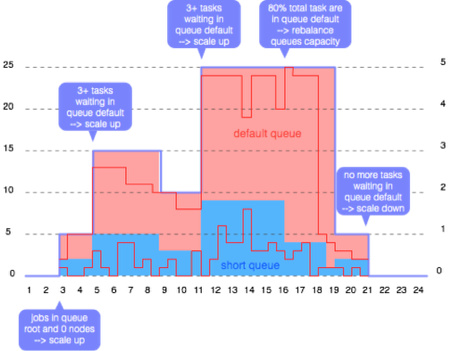

The diagram above illustrates the completeness of the solution, describing a situation where the system ensures cost effectiveness during peaks of processing requests. Moreover, operators can configure specific processing load thresholds in order to manage the auto-scaling flow.

Users may have unpredictable processing needs over a given time period. Using the container approach described in the previous sections, the demonstration showed how the Capacity Manager can reconfigure the cluster capacity on the fly (see the diagram in this section).

Another important point in the provided example is that the Capacity Manager keeps an active node available for processing for the remainder of an hour that has already been consumed. This matters for cost effectiveness, especially in the case of cloud bursting to a public cloud provider, where a partially consumed node-hour is charged as a full hour.
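The reasoning behind this can be sketched in a few lines. The example assumes a provider that charges per started hour; the function names, the 3600-second billing period, and the 5-minute release margin are hypothetical and are not taken from the Capacity Manager.

```python
import math

BILLING_PERIOD_S = 3600  # assumption: the provider charges per started hour


def billed_hours(uptime_s: float) -> int:
    """Hours charged for a node when partial hours are rounded up."""
    return max(1, math.ceil(uptime_s / BILLING_PERIOD_S))


def seconds_already_paid(uptime_s: float) -> float:
    """Time left in the hour that has already been charged."""
    return billed_hours(uptime_s) * BILLING_PERIOD_S - uptime_s


def should_release(uptime_s: float, idle: bool, margin_s: float = 300) -> bool:
    """Release an idle node only when its paid hour is about to end."""
    return idle and seconds_already_paid(uptime_s) <= margin_s
```

Under these assumptions, a node that becomes idle 10 minutes after starting is kept, since 50 minutes of capacity have already been paid for, while a node that is idle a few minutes before the hour boundary is released so that a new hour is not charged.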

GEP welcomes new partnerships to collaborate on adding processing services to the Platform’s services portfolio.

You can contact us by replying to this topic, or by email through the ‘contact’ link here.