When a job fails in the sandbox or in production, you usually want to know why. As a service provider, you can report information about the failing workflow. This topic explains how to handle errors and report the exception properly to the end user.

Processing logging

Logging during processing is essential: it lets you debug the workflow execution and easily trace an error back to its origin. The framework also uses this logging to report errors to the end user.

The last call to the ciop-log command in your processor code is used to report the exception in case of failure.

For instance, here is the stderr of a node execution in the workflow:

2017-06-08T14:51:52.916266 [INFO ] [user process] Start processing.

2017-06-08T14:51:53.597069 [INFO ] [user process] Output option: geotiff

2017-06-08T14:51:53.597174 [INFO ] [user process] Looping over all inputs...

2017-06-08T14:51:53.898278 [INFO ] [user process] input #1: https://catalog.terradue.com//sentinel2/search?format=json&uid=S2A_MSIL1C_20170521T100031_N0205_R122_T31PFM_20170521T101053

2017-06-08T14:51:53.898400 [INFO ] [user process] requesting enclosure: opensearch-client "https://catalog.terradue.com//sentinel2/search?format=json&uid=S2A_MSIL1C_20170521T100031_N0205_R122_T31PFM_20170521T101053" enclosure

2017-06-08T14:51:57.329535 [INFO ] [user process] Downloading input at https://store.terradue.com/download/sentinel2/files/v1/S2A_MSIL1C_20170521T100031_N0205_R122_T31PFM_20170521T101053

2017-06-08T14:52:26.511613 [INFO ] [user process] start processing 'S2A_MSIL1C_20170521T100031_N0205_R122_T31PFM_20170521T101053.zip'

2017-06-08T14:52:28.172522 [ERROR ] [user process] Error processing S2A_MSIL1C_20170521T100031_N0205_R122_T31PFM_20170521T101053.zip : division by zero

2017-06-08T14:52:28.172573 [INFO ] [user process] ret_code main: 1

2017-06-08T14:52:28.172676 [ERROR ] [user process] Processing S2A_MSIL1C_20170521T100031_N0205_R122_T31PFM_20170521T101053 ended with an error : division by zero

java.lang.RuntimeException: PipeMapRed.waitResultThreads(): subprocess failed with code 1

We see that the processing script uses ciop-log to report information at runtime.

The script exits with error code 1, so in this failing case the last ciop-log call is the one that reports the processing issue.

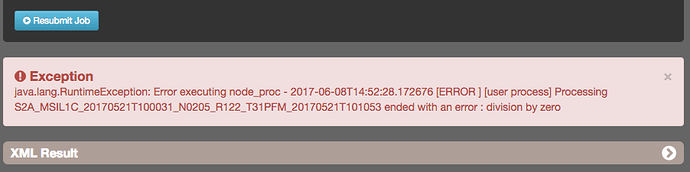

This error is reported at end user via the portal as an exception as follow
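The pattern described in this section can be sketched as follows: the ERROR message is the last log call made before the script returns a non-zero code. This is a minimal sketch rather than a structure prescribed by the framework. The names `log`, `fail` and `process_input` are hypothetical, and the fallback to plain stderr output is an assumption added so that the snippet can be tried outside the sandbox, where ciop-log is not installed.

```shell
#!/bin/bash

# Hypothetical wrapper: use ciop-log when available, else write to stderr
# (the stderr fallback is an assumption for running outside the sandbox).
log() {
  if command -v ciop-log >/dev/null 2>&1; then
    ciop-log "$@"
  else
    local level=$1
    shift
    echo "[$level] $*" >&2
  fi
}

# Hypothetical helper: log the failure reason, then return the given code,
# so that the ERROR message is the last log call before the failure.
fail() {
  local code=$1
  shift
  log ERROR "$*"
  return "$code"
}

# Hypothetical processing step: fails when the input reference is empty.
process_input() {
  local input=$1
  log INFO "start processing '$input'"
  if [ -z "$input" ]; then
    fail 1 "Error processing input : empty reference"
    return $?
  fi
  log INFO "processed '$input'"
}
```

In a processor script, a call such as `process_input "$input" || exit $?` would then end the node with the returned code right after the ERROR message has been logged.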

Aggregated error handling

Your workflow can potentially run in parallel. If you do not want the entire workflow to end at the first input that fails, it is useful to have a dedicated node at the end of the workflow that, once all inputs have been processed, evaluates the errors that occurred during the parallel processing phase.

To do so, make sure your script does not exit with an error when it encounters an issue, but instead writes and publishes a file with the error. For instance, in a bash script it could be a set of commands like this:

...

myproc "$input" | tee "$input.log"

# check the main processor exit code (not the exit code of tee) and publish to next node accordingly

if [[ ${PIPESTATUS[0]} != 0 ]]; then

# publish processing log for next node evaluation

ciop-publish -a "$input.log"

echo "$input ERROR" | ciop-publish -s

else

echo "$input OK" | ciop-publish -s

fi

...

In that case, the processing node processes all inputs and simply passes the processing status of each input to the next node.

The error assessment node is defined as follows in the application.xml:
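One detail matters when the processor output is piped through `tee`: after a pipeline, `$?` holds the exit code of the last command (`tee`), not of the processor. The sketch below shows one way to capture the processor's own exit code with bash's `PIPESTATUS` array. `myproc_stub` and `run_and_report` are hypothetical names used for illustration only; in the sandbox the status line would be piped to `ciop-publish -s` instead of being printed.

```shell
#!/bin/bash

# Hypothetical stand-in for the real processor: prints a line and
# fails when the input is the literal string "bad".
myproc_stub() {
  echo "processing $1"
  [ "$1" != "bad" ]
}

# Run the processor through tee and print "<input> OK" or "<input> ERROR".
# ${PIPESTATUS[0]} is the exit code of the first command of the pipeline
# (the processor); $? alone would be the exit code of tee.
run_and_report() {
  local input=$1 logfile=$2 rc
  myproc_stub "$input" | tee "$logfile" >/dev/null
  rc=${PIPESTATUS[0]}
  if [ "$rc" -ne 0 ]; then
    echo "$input ERROR"
  else
    echo "$input OK"
  fi
}
```

`PIPESTATUS` has to be read immediately after the pipeline, because any later command overwrites it.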

...

<jobTemplate id="query">

<streamingExecutable>/application/query/run</streamingExecutable>

<defaultParameters>

<parameter id="errorpct" scope="runtime" maxOccurs="1" title="Error percentage threshold" abstract="Above this percentage of error, the job is considered as failed"></parameter>

</defaultParameters>

<defaultJobconf>

<property id="ciop.job.max.tasks">1</property>

</defaultJobconf>

</jobTemplate>

...

This job has a single parameter that sets the percentage of failed inputs above which the workflow is considered failed.

Note that the ciop.job.max.tasks property is set to 1, which means that the node is not executed in parallel.

This node would typically loop over all the statuses published by the previous node and fail the entire workflow if the threshold is exceeded.

...

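# threshold is expected to hold the value of the errorpct parameter

k=0

t=0

while read input status; do

if [[ "$status" != "OK" ]]; then

# count the inputs that failed

k=$(( k + 1 ))

fi

t=$(( t + 1 ))

done

# percentage of failed inputs

pct=$(( k * 100 / t ))

if [[ $pct -gt $threshold ]]; then

ciop-log ERROR "$pct% of the input failed"

exit 32

fi

exit 0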

This simple node exits with an error, and so fails the workflow, when too many inputs failed with respect to the threshold.
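The assessment logic can also be isolated in functions so that it can be tried outside the workflow. The following is a minimal sketch under a few assumptions: it follows the `errorpct` description and counts failed inputs, `failed_pct` and `assess` are hypothetical names, and an empty status list is treated as 0% failure to avoid a division by zero.

```shell
#!/bin/bash

# Read "<input> <status>" lines on stdin and print the integer percentage
# of inputs whose status is not OK. Prints 0 when there is no input
# (assumption: an empty list is not treated as a failure).
failed_pct() {
  local total=0 failed=0 input status
  while read -r input status; do
    [ -n "$input" ] || continue
    total=$((total + 1))
    [ "$status" = "OK" ] || failed=$((failed + 1))
  done
  if [ "$total" -eq 0 ]; then
    echo 0
  else
    echo $((failed * 100 / total))
  fi
}

# Return 32 (the exit code used in the listing above) when the failure
# percentage read from stdin is strictly above the threshold, 0 otherwise.
assess() {
  local threshold=$1 pct
  pct=$(failed_pct)
  [ "$pct" -le "$threshold" ] || return 32
}
```

For example, `printf 'a OK\nb ERROR\n' | assess 40` returns 32, because 50% of the inputs failed and the threshold is 40.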